Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Discover how advanced prompt chaining empowers LLMs to handle complex, multi-step tasks. Learn methods, tools, real-world use cases, and design best practices.

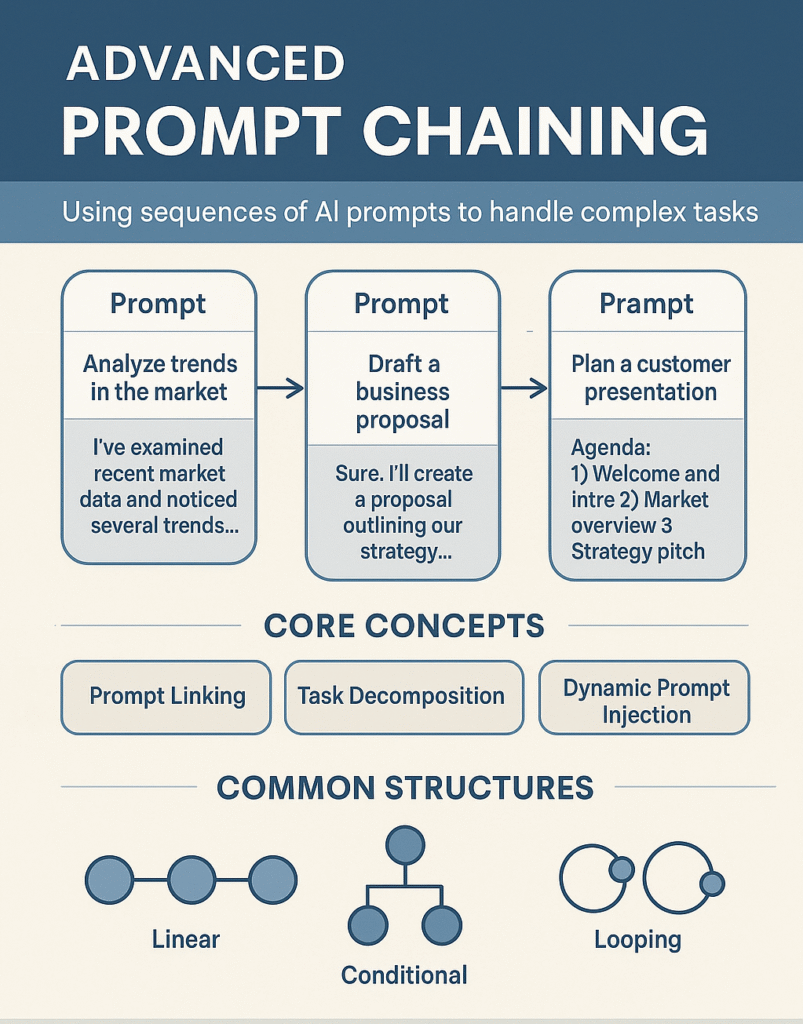

Prompt engineering isn’t just about crafting the perfect single prompt anymore. As we push LLMs like GPT-4, Claude, and LLaMA into increasingly complex tasks, we need advanced prompt chaining: a structured way to connect multiple prompts, each consuming the previous one’s output, so AI systems can work through a problem step by step.

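Long single prompts become error-prone on multi-step tasks. Advanced prompt chaining fills that gap by sequencing logic across several focused prompts, much as developers split a program across multiple lines of code.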

| Structure | Description |
|---|---|
| Linear | Sequential prompts, one feeding into the next |
| Conditional | Logic branches based on previous outputs |
| Looping | Repeat prompts until a condition is met |
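
The three structures in the table can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the `LLM` callable type, the prompt wording, and `fake_llm` (a deterministic stand-in so the sketch runs without an API key) are all assumptions made for this example. In practice you would pass a function that calls your actual model.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical interface: prompt in, model text out


def linear_chain(llm: LLM, text: str) -> str:
    """Linear: each prompt consumes the previous prompt's output."""
    summary = llm(f"Summarize in one sentence:\n{text}")
    return llm(f"Rewrite this summary as a headline:\n{summary}")


def conditional_chain(llm: LLM, message: str) -> str:
    """Conditional: the first output decides which prompt runs next."""
    label = llm(f"Classify as URGENT or ROUTINE:\n{message}").strip().upper()
    if label == "URGENT":
        return llm(f"Draft an escalation note for:\n{message}")
    return llm(f"Draft a standard reply to:\n{message}")


def looping_chain(llm: LLM, draft: str, max_rounds: int = 3) -> str:
    """Looping: revise until a check passes or the round limit is reached."""
    for _ in range(max_rounds):
        verdict = llm(f"Answer PASS or FAIL. Is this under 20 words?\n{draft}")
        if verdict.strip().upper() == "PASS":
            break
        draft = llm(f"Shorten this text:\n{draft}")
    return draft


def fake_llm(prompt: str) -> str:
    """Deterministic stand-in for a real model (assumption for this sketch)."""
    instruction, _, payload = prompt.partition("\n")
    if instruction.startswith("Classify"):
        return "URGENT" if "outage" in payload.lower() else "ROUTINE"
    if instruction.startswith("Answer PASS"):
        return "PASS" if len(payload.split()) < 20 else "FAIL"
    if instruction.startswith("Shorten"):
        words = payload.split()
        return " ".join(words[: max(1, len(words) // 2)])
    return f"[{instruction.rstrip(':')}] {payload}"


if __name__ == "__main__":
    print(linear_chain(fake_llm, "Quarterly sales rose in every region."))
    print(conditional_chain(fake_llm, "Site outage since 9am"))
    print(len(looping_chain(fake_llm, " ".join(["word"] * 50)).split()))
```

The `max_rounds` cap in the looping chain is a deliberate design choice: a loop that depends on model output needs a hard stop so latency and cost stay bounded even when the check never passes.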

| Industry | Example Use Case |
|---|---|
| Customer Support | Escalation routing with reply drafting |
| Healthcare | Medical report summarization + diagnosis suggestion |
| Legal | Clause comparison and precedent extraction |
| E-commerce | Product FAQ generation + title optimization |

Chaining enables real-time adaptability and multi-agent collaboration, paving the way for AutoGPT-like workflows.

| Feature | Prompt Chaining | Traditional Code |
|---|---|---|
| Flexibility | High | Medium |
| Ease of Use | Non-technical friendly | Requires coding |
| Maintenance | Dynamic and adaptive | Version-dependent |

Prompt chaining unlocks the true potential of LLMs for multi-step logic. Start chaining to bring smarter AI into your workflows.

| Section | Key Concepts | Examples / Notes |
|---|---|---|
| What is Advanced Prompt Chaining? | Linking multiple prompts to handle complex tasks | AI simulating workflows through chained reasoning |
| Why Use It? | Enhanced reasoning, better accuracy, modular design | Replaces long, error-prone single prompts |
| Core Techniques | Prompt Linking, Task Decomposition, Dynamic Prompt Injection | Feed outputs of one prompt into the next |
| Structures | Linear, Conditional, Looping | Choose based on task complexity |
| Tools & Frameworks | LangChain, Flowise, PromptLayer | For building, testing, and tracking chains |
| Design Tips | Manage tokens, assign roles, pass variables | Keep prompts clear, context-rich |
| Industries | Healthcare, Legal, E-commerce, Support | Real-world AI flows: email writing, diagnosis, contract review |
| Best Practices | Isolate prompt testing, feedback loops, fallback prompts | Use versioning and monitoring |
| Common Challenges | Token drift, latency, hallucination, cost | Optimize with short, focused prompts |
| Real-World Example | Email assistant chaining 3 prompts: summarize > extract > generate | Streamlines communication with context retention |
| Ethical Considerations | Avoid leakage, document logic, maintain transparency | Chain only safe and clean data |
| Comparisons | Prompt chaining vs. traditional scripting | Chaining = flexible and dynamic |
| When Not to Use | Simple tasks or when latency is critical | Avoid chaining if one prompt suffices |
| Future Outlook | Multi-agent AI, autonomous flows, dynamic logic | AutoGPT-like agents using adaptive chains |
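
The email assistant row (summarize > extract > generate) can be sketched as follows. It also touches on three of the design tips from the table: assigning a role, passing variables between prompts, and using a fallback. The template wording, the `LLM` callable type, the `email_assistant` function, and `FALLBACK_REPLY` are hypothetical names chosen for this illustration. The sketch uses only the Python standard library rather than any of the frameworks listed above.

```python
from string import Template
from typing import Callable

LLM = Callable[[str], str]  # hypothetical interface: prompt in, model text out

# One short, focused template per step; $-placeholders carry variables forward.
SUMMARIZE = Template("You are an executive assistant. Summarize this email:\n$email")
EXTRACT = Template("List the action items in this summary, one per line:\n$summary")
GENERATE = Template(
    "Write a short reply that addresses these action items.\n"
    "Summary: $summary\nAction items:\n$actions"
)
FALLBACK_REPLY = "Thanks for your email. I will follow up shortly."


def email_assistant(llm: LLM, email: str) -> dict[str, str]:
    """Run summarize > extract > generate and return every intermediate result."""
    summary = llm(SUMMARIZE.substitute(email=email)).strip()
    actions = llm(EXTRACT.substitute(summary=summary)).strip()
    # The final prompt receives both earlier outputs, so context is retained.
    reply = llm(GENERATE.substitute(summary=summary, actions=actions)).strip()
    # Fallback: never hand an empty model output to the user.
    return {"summary": summary, "actions": actions, "reply": reply or FALLBACK_REPLY}
```

Returning the intermediate `summary` and `actions` alongside the final reply makes each step easy to test in isolation and to log for monitoring, which is the point of the "isolate prompt testing" practice in the table.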